Just two months after its initial release, OpenAI’s ChatGPT reached 100 million users, making it the fastest-growing consumer application in history according to Reuters. In the months since, we have seen AI strategy rise to a top priority among business leaders: More than half of CXOs now list “Generative AI and LLMs” among their top five priorities, according to our latest Battery Ventures Cloud Software Spending survey.

But the growing adoption of AI has also brought increased scrutiny and awareness of the potential risks these systems pose. The ‘black box’ nature of deep-learning models makes it difficult for regulators and consumers to trust what they don’t understand.

Incidents of LLM misuse have already made headlines in the last few months. Some examples include Samsung’s ban of ChatGPT after an employee leaked sensitive code and the class-action lawsuit filed against a popular AI art generator, Lensa AI, alleging that the app collected users’ biometric data without permission.

As businesses move quickly to incorporate AI into their products, they are under pressure from regulators, who have closely followed the rise of AI, to do so ethically and transparently. Policymakers worldwide have begun to lay the foundation for what responsible AI looks like and how it will be governed across markets. In our view, every company with an AI strategy will need a corresponding strategy for AI governance and safety, and we see a new tech stack emerging to serve that need.

The Current State of AI Regulation

But how are regulators actually thinking about AI oversight? Officials in the EU are moving quickly and leading the charge with the AI Act, rumored to go into effect in 2024. The authors of the EU AI Act propose a variable, risk-based approach, imposing “enhanced oversight” on “high-risk” AI systems and only “limited transparency obligations” on “non-high-risk” systems. The Act focuses on thorough documentation of, and visibility into, AI systems, so that their design, purpose and risk-mitigation strategies can be better understood. It is also setting the bar for violation consequences: a fine equal to the higher of €20M or 4% of annual revenue.
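To make the "higher of" rule concrete, here is a minimal sketch of the arithmetic. It uses only the two figures quoted above (€20M and 4% of annual revenue). The function name `max_fine_eur` and its parameters are our own illustrative choices, and the final text of the Act may define the amounts and the revenue base differently.

```python
def max_fine_eur(annual_revenue_eur: float,
                 fixed_amount_eur: float = 20_000_000,
                 revenue_share: float = 0.04) -> float:
    """Return the higher of a fixed amount and a share of annual revenue.

    The defaults mirror the figures quoted in the text; they are
    assumptions for illustration, not a reading of the legal text.
    """
    if annual_revenue_eur < 0:
        raise ValueError("annual revenue must be non-negative")
    return max(fixed_amount_eur, revenue_share * annual_revenue_eur)


if __name__ == "__main__":
    # Below EUR 500M in revenue the fixed amount dominates;
    # above it, the revenue-based amount does.
    for revenue in (100_000_000, 500_000_000, 1_000_000_000):
        print(revenue, max_fine_eur(revenue))
```

Under these assumed figures, the two amounts are equal at €500M of annual revenue, so the revenue-based component only matters for larger companies.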

By contrast, the U.S. today lacks a federal regulatory framework for AI. Despite the lack of federal oversight, many of the companies we’ve spoken with follow the voluntary AI Risk Management Framework provided by the National Institute of Standards and Technology. In the absence of mandatory regulation from federal officials, local and state governments are stepping in to fill the gap: New York City’s Local Law 144, effective since July 2023, mandates third-party bias audits for automated-hiring systems, a more technical requirement than the EU’s documentation-centric approach.
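As a rough illustration of what a bias audit might compute, the sketch below calculates an impact ratio: each group's selection rate divided by the selection rate of the most-selected group. We are assuming this metric for illustration; it is not a statement of what Local Law 144 requires, and the function name `impact_ratios` and the sample data are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def impact_ratios(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute per-group selection rate relative to the highest group rate.

    `records` is an iterable of (group, was_selected) pairs.
    """
    totals: Counter = Counter()
    selected: Counter = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    if not totals:
        raise ValueError("no records provided")

    # Selection rate = selected candidates / all candidates in the group.
    rates = {group: selected[group] / totals[group] for group in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


if __name__ == "__main__":
    # Hypothetical data: group A has 5 of 10 selected, group B has 3 of 10.
    sample = ([("A", True)] * 5 + [("A", False)] * 5
              + [("B", True)] * 3 + [("B", False)] * 7)
    print(impact_ratios(sample))
```

A ratio well below 1.0 for a group flags a disparity worth investigating; what threshold counts as a problem is a policy question the code does not answer.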

It is becoming clear that many businesses are beginning to think proactively about risk and compliance controls around AI use, even as U.S. federal regulation remains in development.

So, why act now? AI that is not developed with guardrails in mind can create critical issues for companies far downstream from initial development. In addition to the financial consequences that regulation will soon impose, companies face significant business and brand risk from AI misuse. For example, AI that uses personally identifiable information (PII) or biometric data requires significant trust from customers, as does AI that is used in medical diagnostics or underwriting. Misusing AI in these applications could not only damage a company’s brand but also adversely affect consumers.

The Emerging AI Compliance and Governance Tech Stack

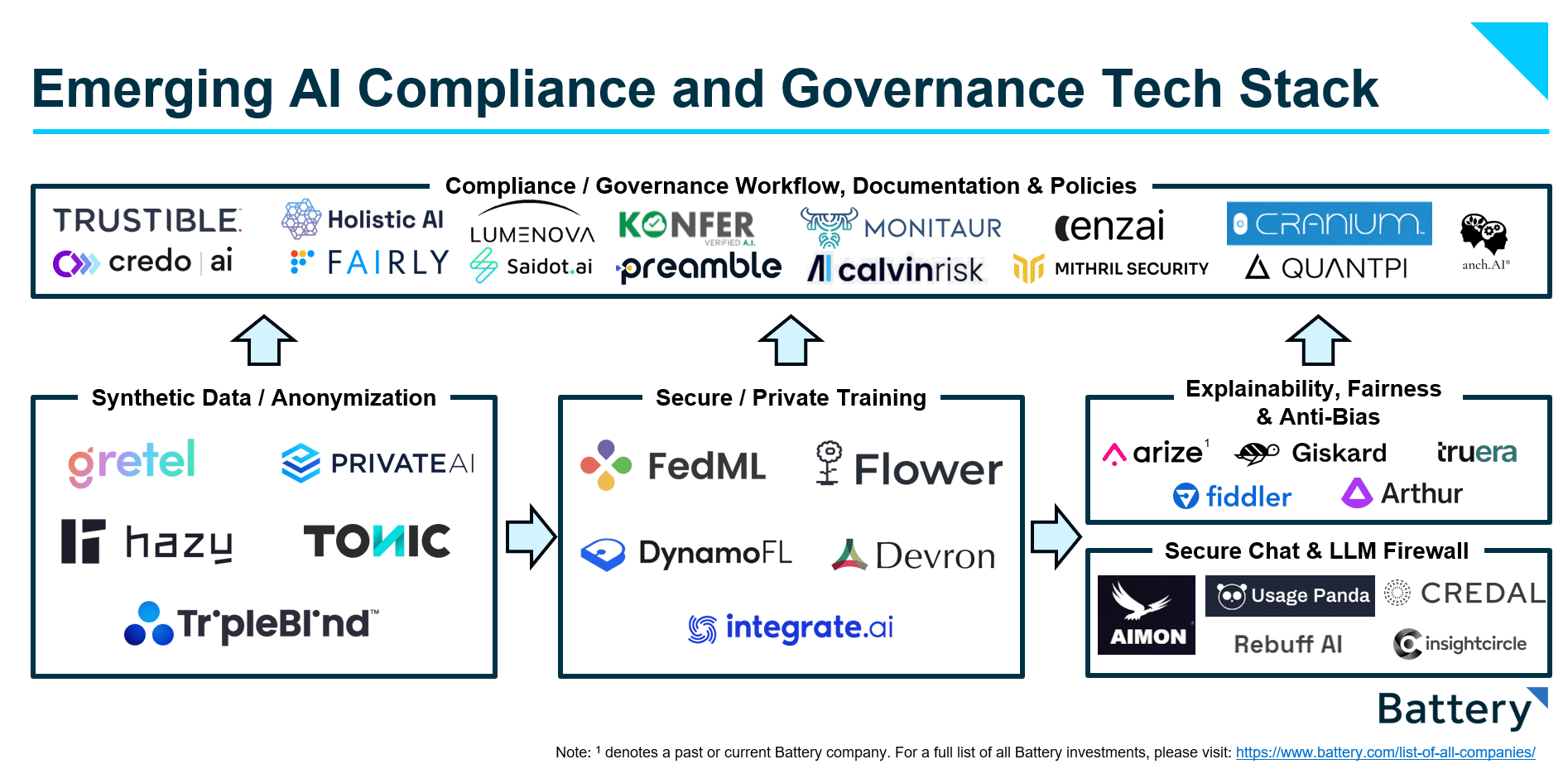

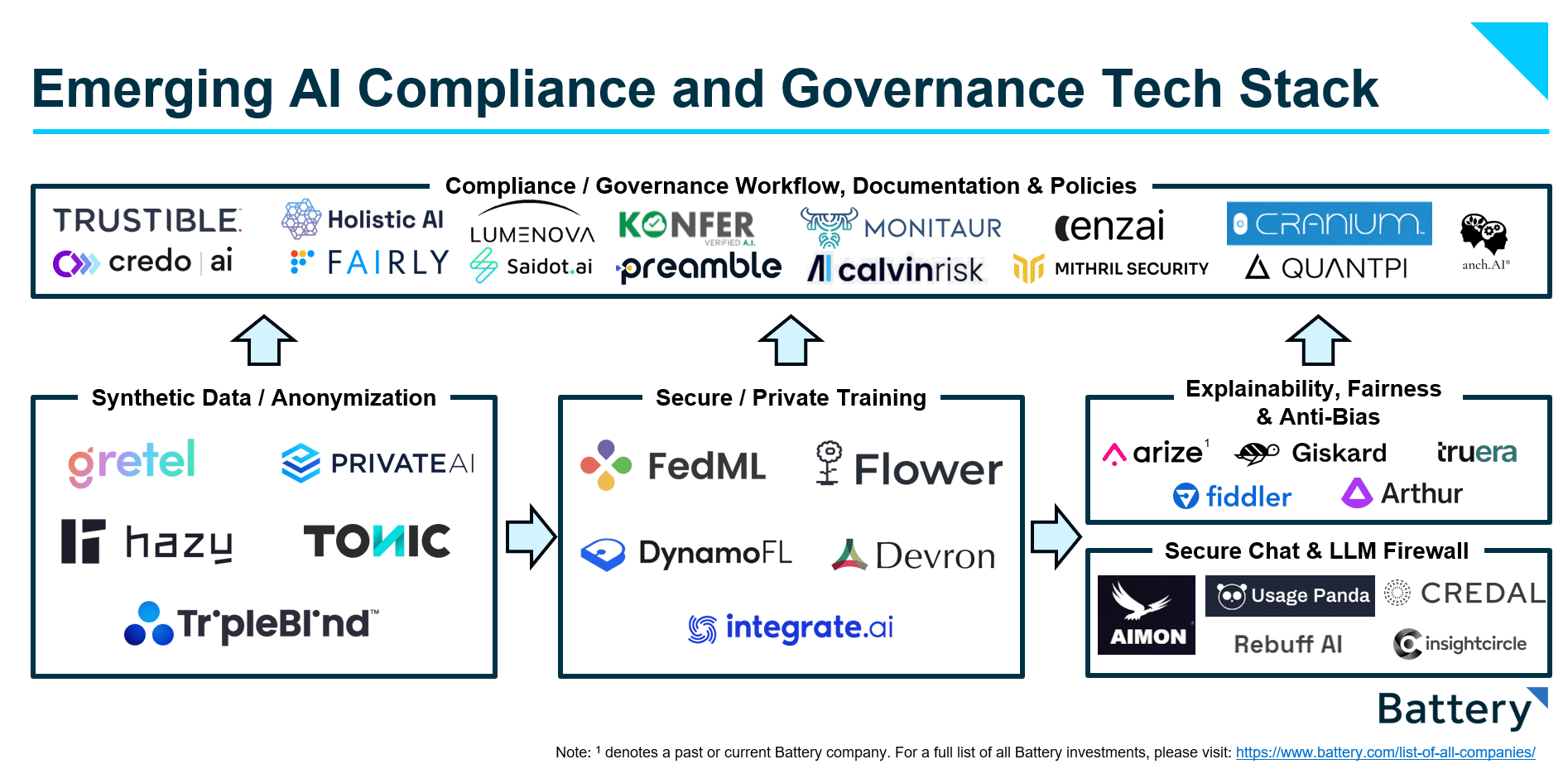

In response to these oncoming AI regulations, we’ve seen a compliance and governance tech stack emerge that enables companies to build, deploy and manage compliant and transparent AI systems. The ML model-development process has evolved from a purely engineering problem to one that involves a variety of stakeholders, as the use of AI comes under increased scrutiny from executives, regulators and customers alike.

We view this AI compliance and governance tech stack as two separate, but interdependent, pieces:

- Machine Learning (ML) Stack – This technology targets technical stakeholders (ML engineers, data scientists) who are responsible for building the models and infrastructure needed to deliver safe AI systems.

- Governance / Compliance Workflow Layer – This software targets the non-technical stakeholders (compliance, legal, executives, regulators) who are responsible for the business and regulatory alignment of AI systems.

The Machine Learning Infrastructure Stack

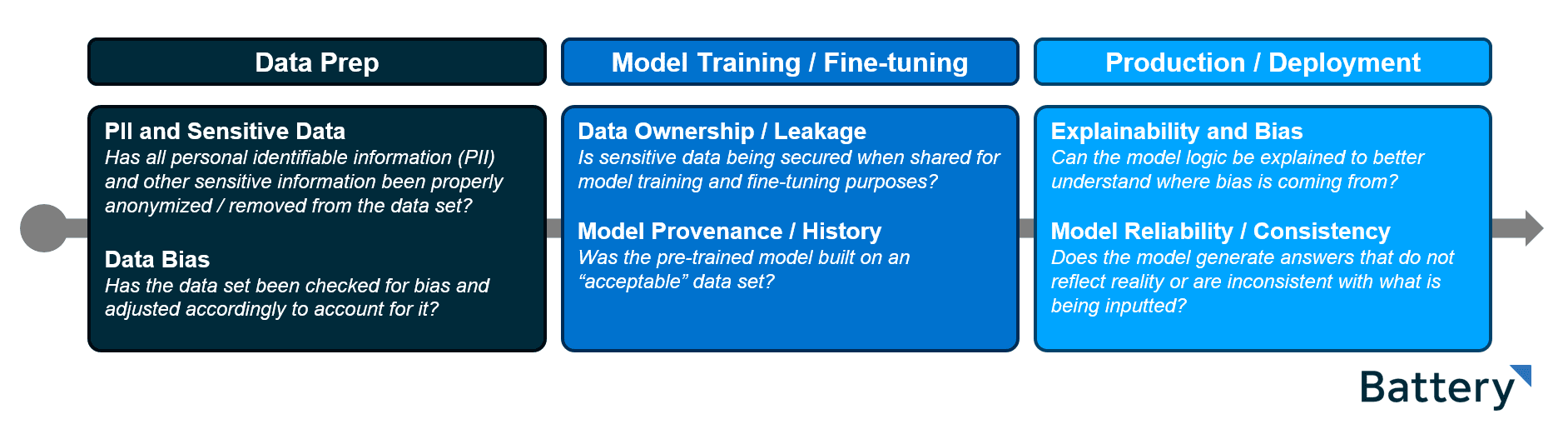

AI risks are introduced across every stage of the ML lifecycle. This means that models must be intentionally designed, trained and deployed from the ground up in a safe and compliant way.

At Battery, we break down the ML lifecycle into three discrete steps, each with its own set of compliance and governance risks: data prep, model training / fine-tuning, and production / deployment.

Based on our exploration of the space, we’ve defined four buckets to encompass emerging tooling:

- Synthetic Data / Anonymization (Data Prep) – Helping teams remove the PII and sensitive data from their dataset and augment it with synthetic data to remove bias or replace redacted content.

- Secure / Private Training (Model Training / Fine-tuning) – Leveraging a variety of techniques like federated learning and differential privacy to help companies safely train their own models and fine-tune pre-trained models.

- Explainability, Fairness and Anti-Bias (Production / Deployment) – Evaluating and monitoring models in production to identify bias and drift, as well as bringing transparency into the logic behind the models.

- Secure Chat and LLM Firewall (Production / Deployment) – Building a wrapper or private instance of LLM deployments to fully vet the inputs and outputs of the model to meet compliance requirements. (This is a space that we have found to overlap in a nuanced manner with security, which we’re more than excited to chat about if you’re building in the space.)
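To give a feel for the last bucket, here is a minimal sketch of an "LLM firewall" wrapper that redacts sensitive strings from a prompt before it reaches the model and from the response before it reaches the user. Everything here is an assumption for illustration: the two regex patterns, the `redact` and `guarded_call` helpers, and the stand-in `echo_model` function are hypothetical, and production tools would need much broader detection than two patterns.

```python
import re
from typing import Callable

# Illustrative patterns only; real PII detection needs far wider coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched pattern with a bracketed label, e.g. [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guarded_call(model: Callable[[str], str], prompt: str) -> str:
    """Vet both the input to and the output from a model callable."""
    safe_prompt = redact(prompt)      # nothing sensitive leaves the boundary
    response = model(safe_prompt)
    return redact(response)           # nothing sensitive comes back either


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call (hypothetical)."""
    return f"You said: {prompt}"


if __name__ == "__main__":
    print(guarded_call(echo_model, "Email jane@example.com, SSN 123-45-6789"))
```

Passing the model in as a plain callable keeps the wrapper independent of any particular LLM provider, which is one reasonable way to apply the same checks to hosted and private deployments alike.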

The Governance / Compliance Workflow Layer

In addition to safeguards built into the ML stack, we predict the rise of a compliance / governance workflow layer that is purpose-built for non-technical business users managing AI within organizations. These platforms act as systems of record to document, manage and provide visibility into a business’s AI inventory, AI use cases and project stakeholders. Many of these platforms offer out-of-the-box guardrails that map to existing and emerging regulations to help businesses meet the documentation requirements set by regulators.

These platforms handle internal documentation and reporting of AI use, and they bridge the knowledge gap between the technical teams building AI systems and the non-technical business teams managing them. By translating bottom-up model metrics and artifacts into simple, understandable reports for non-technical stakeholders, governance and compliance platforms will build transparency and buy-in both inside and outside their organizations.

Conclusion & Market Opportunity

Overall, we believe that the AI governance software stack we’ve outlined represents an exciting emerging software category and a large market opportunity. Trust and transparency are key. We think that companies will continue to adopt software that helps them ensure their AI is safe, compliant and transparent. We are excited to see this market develop and are keeping an ear to the ground. If you are building or investing in AI governance, please reach out!

*Denotes a Battery portfolio company.

The information contained herein is based solely on the opinions of Dharmesh Thakker, Dallin Bills, Jason Mendel, Patrick Hsu and Adam Piasecki and nothing should be construed as investment advice. This material is provided for informational purposes, and it is not, and may not be relied on in any manner as, legal, tax or investment advice or as an offer to sell or a solicitation of an offer to buy an interest in any fund or investment vehicle managed by Battery Ventures or any other Battery entity.

The information and data are as of the publication date unless otherwise noted. Content obtained from third-party sources, although believed to be reliable, has not been independently verified as to its accuracy or completeness and cannot be guaranteed. Battery Ventures has no obligation to update, modify or amend the content of this post nor notify its readers in the event that any information, opinion, projection, forecast or estimate included, changes or subsequently becomes inaccurate.

The information above may contain projections or other forward-looking statements regarding future events or expectations. Predictions, opinions and other information discussed in this post are subject to change continually and without notice of any kind and may no longer be true after the date indicated. Battery Ventures assumes no duty to and does not undertake to update forward-looking statements.
